How to create an Application Load Balancer using Terraform? (AWS ALB | HTTPS)

- You can find the source code for this video in my GitHub Repo.

Intro

In this video, we will create an application load balancer in AWS using Terraform.

- In the first example, we will manually register EC2 instances with the load balancer. This approach is useful when you have a stateful application and cannot use an auto-scaling group.

- In the second example, we will use an auto-scaling group that automatically spins up new EC2 instances based on the load and registers them with the load balancer's target group. It's perfect for stateless applications such as REST APIs or other web services.

For the demo, we will create a Golang REST API and use Packer to build an AMI. The Packer build will also include a test suite to verify that our app is installed properly.

Create Golang REST API

First of all, we need an application that we can use to create a demo with the application load balancer. I decided to build one instead of using one of the open-source demo apps for two reasons.

- First, I want my app to serve traffic and expose a health check on different ports. Typically, you would have an app that listens on port 8080, for example, with a very simple health check that DevOps engineers can use to quickly check the status of the app; it may be exposed to the Internet as well. But you also want a proper health check endpoint that verifies all of the app's dependencies, such as the database connection and response duration. That health check should not be exposed to the Internet; only your monitoring tools and the load balancer should use it, so that traffic is routed only to healthy hosts. Our app will listen on port 8080, have a simple `/ping` endpoint, and expose a health check on port 8081.

- Second, I want to show you how to build a Packer image and run tests to verify that the app is installed correctly.

I chose Go for the app. We will use the Gin web framework to build a simple REST API. Let's import all the necessary libraries; to run two HTTP web servers side by side, we need an errgroup (from `golang.org/x/sync/errgroup`).

File: `my-app/main.go`

Next, let's create a getHostname handler for the /hostname endpoint.

It returns the hostname reported by the kernel of the node where the app is running.

File: `my-app/main.go`

Then create another handler for the health check. Right now, it returns JSON with the status `ready`, but in practice, it should check all of the app's dependencies, such as the database connection.

File: `my-app/main.go`

Optionally, you can have a /ping endpoint to quickly check the status of the app. It can be exposed to the Internet.

File: `my-app/main.go`

Now we need to create two routers. The main router will serve the /hostname and /ping endpoints on port 8080.

File: `my-app/main.go`

The second router's only job is to serve the /health endpoint on port 8081.

File: `my-app/main.go`
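The split between the two routers can be sketched with two `http.ServeMux` values (standard library instead of Gin; the handler bodies are placeholders). It shows why /health is invisible on the main port: the main router simply has no such route and answers 404.

```go
package main

import (
	"fmt"
	"net/http"
	"net/http/httptest"
	"os"
)

// newMainRouter serves the public endpoints (port 8080 in the tutorial).
func newMainRouter() *http.ServeMux {
	mux := http.NewServeMux()
	mux.HandleFunc("/ping", func(w http.ResponseWriter, r *http.Request) {
		w.Header().Set("Content-Type", "application/json")
		fmt.Fprint(w, `{"message":"pong"}`)
	})
	mux.HandleFunc("/hostname", func(w http.ResponseWriter, r *http.Request) {
		name, _ := os.Hostname()
		fmt.Fprint(w, name)
	})
	return mux
}

// newHealthRouter serves only /health (port 8081 in the tutorial).
func newHealthRouter() *http.ServeMux {
	mux := http.NewServeMux()
	mux.HandleFunc("/health", func(w http.ResponseWriter, r *http.Request) {
		w.Header().Set("Content-Type", "application/json")
		fmt.Fprint(w, `{"status":"ready"}`)
	})
	return mux
}

// statusOf sends GET path to h in-process and returns the HTTP status code.
func statusOf(h http.Handler, path string) int {
	rec := httptest.NewRecorder()
	h.ServeHTTP(rec, httptest.NewRequest(http.MethodGet, path, nil))
	return rec.Code
}

func main() {
	mainRouter, healthRouter := newMainRouter(), newHealthRouter()
	fmt.Println("main   /ping:  ", statusOf(mainRouter, "/ping"))
	fmt.Println("main   /health:", statusOf(mainRouter, "/health")) // not routed here
	fmt.Println("health /health:", statusOf(healthRouter, "/health"))
}
```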

In the main function, we need to initialize the servers and associate them with the corresponding routers. Then we spin up goroutines for the main server and the health server.

You can find go.mod and go.sum in my GitHub repository.

Create AWS VPC

As always, to make this tutorial self-contained, let's create an AWS VPC using Terraform.

We will start by defining the AWS provider and setting version constraints for the provider and Terraform itself.

File: `terraform/0-provider.tf`

Then the VPC resource with a /16 CIDR range, which gives us 65,536 (2^16) IP addresses.

File: `terraform/1-vpc.tf`
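As a quick check of the /16 arithmetic, the number of IPv4 addresses in a CIDR block is 2^(32 - prefix length). The sketch below uses Go's `net/netip`; 10.0.0.0/16 is only an example range, since the tutorial's actual CIDR isn't shown here.

```go
package main

import (
	"fmt"
	"net/netip"
)

// addressCount returns the number of IPv4 addresses in a CIDR block,
// which is 2^(32 - prefix length).
func addressCount(cidr string) (uint64, error) {
	p, err := netip.ParsePrefix(cidr)
	if err != nil {
		return 0, err
	}
	if !p.Addr().Is4() {
		return 0, fmt.Errorf("%s is not an IPv4 prefix", cidr)
	}
	return 1 << (32 - p.Bits()), nil
}

func main() {
	for _, cidr := range []string{"10.0.0.0/16", "10.0.0.0/24"} { // example ranges
		n, err := addressCount(cidr)
		if err != nil {
			panic(err)
		}
		fmt.Printf("%s -> %d addresses\n", cidr, n)
	}
}
```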

Next, an Internet Gateway to provide internet access for the public subnets.

File: `terraform/2-igw.tf`

Then let's define two private and two public subnets.
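The subnet CIDRs themselves aren't shown here. One common way to derive them is Terraform's `cidrsubnet(prefix, newbits, netnum)` function; the Go sketch below mirrors its IPv4 behavior to show how four subnets could be carved out of a /16. The 10.0.0.0/16 range, the /19 size, and the subnet names are hypothetical. Keep in mind that AWS reserves five addresses in every subnet (the first four and the last one).

```go
package main

import (
	"encoding/binary"
	"fmt"
	"net/netip"
)

// cidrSubnet mirrors Terraform's cidrsubnet(prefix, newbits, netnum) for IPv4:
// it lengthens the prefix by newbits and places netnum in those new bits.
func cidrSubnet(prefix string, newbits, netnum int) (netip.Prefix, error) {
	p, err := netip.ParsePrefix(prefix)
	if err != nil {
		return netip.Prefix{}, err
	}
	if !p.Addr().Is4() {
		return netip.Prefix{}, fmt.Errorf("%s is not an IPv4 prefix", prefix)
	}
	bits := p.Bits() + newbits
	if newbits < 0 || bits > 32 || netnum < 0 || netnum >= 1<<newbits {
		return netip.Prefix{}, fmt.Errorf("netnum %d does not fit in %d new bits of %s", netnum, newbits, prefix)
	}
	base := p.Masked().Addr().As4()
	n := binary.BigEndian.Uint32(base[:]) | uint32(netnum)<<(32-bits)
	var out [4]byte
	binary.BigEndian.PutUint32(out[:], n)
	return netip.PrefixFrom(netip.AddrFrom4(out), bits), nil
}

func main() {
	// Hypothetical layout: four /19 subnets from a hypothetical 10.0.0.0/16 VPC.
	for i, name := range []string{"private-1", "private-2", "public-1", "public-2"} {
		s, err := cidrSubnet("10.0.0.0/16", 3, i)
		if err != nil {
			panic(err)
		}
		// AWS reserves 5 addresses per subnet (the first four and the last one).
		usable := (1 << (32 - s.Bits())) - 5
		fmt.Printf("%-9s %s (%d usable)\n", name, s, usable)
	}
}
```

In practice you would place one private and one public subnet in each of two availability zones, so the load balancer and the instances can span both.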

Then a NAT Gateway, along with an Elastic IP address, to provide outbound Internet access for the private subnets.

File: `terraform/4-nat.tf`

Finally, the route tables to associate the private subnets with the NAT Gateway and the public subnets with the Internet Gateway.

To create all these networking components, initialize Terraform (`terraform init`) and apply the configuration (`terraform apply`).

Create Packer Image

Now let's create an AMI with Packer and install my-app on it. We're going to use this image in the Terraform code to create EC2 instances in the first example and to create a launch template and an auto-scaling group in the second one.

Similar to Terraform, we can explicitly require plugins and set version constraints.

In this case, we want the amazon plugin at version 1.1.4 or higher.

File: `packer/my-app.pkr.hcl`

Next is the amazon-ebs Packer builder, which creates AMIs backed by EBS volumes for use in EC2.

- In this block, we define the AMI name; to make it unique, we can use a `timestamp`.

- Depending on the resource requirements for the build, you can select the appropriate `instance_type`. This is not the instance type that runs the app; it's a temporary EC2 instance used only to build the image, and it is destroyed right after Packer finishes the installation.

- We also need to specify where to build this image; in my case, it's `us-east-1`.

- From the AWS console, find the ID of a public subnet with a default route to the internet gateway and use it in `subnet_id`. You can use private subnets only if you have a VPN or you run Packer inside the AWS VPC.

- To find a base image (Ubuntu 22.04 LTS) for Packer in our region, we can use `source_ami_filter`.

- Then, based on your Linux distribution, specify the default user that Packer uses to SSH into the instance. For Ubuntu, it's the `ubuntu` user; if you use Amazon Linux 2 or the Amazon Linux AMI, the user name is `ec2-user`, and so forth.

Then the build section.

- For the source, select the `amazon-ebs` source that we just defined.

- To copy local files from the machine where you run Packer, you can use the file provisioner. Always copy your files to a temporary directory first, and then use scripts or inline commands to move them to the correct destinations. We will create all these local files in a minute.

- To execute arbitrary commands inside the EC2 instance, you can use a script, or several scripts if you have more than one to execute.

- You can also use inline commands; for example, we need to move the systemd service file to /etc, start the service, and enable it.

- Optionally, you can run some tests against your image, but for that, you need to install the InSpec tool on your machine as well. You can skip this step by commenting out this provisioner.

Next, we need to create all those local files. Let's start with the systemd service definition.

- The important part here is to specify the `ExecStart` command and to configure `Restart` in case the app exits or fails for some reason.

- You can also declare environment variables; for example, set `GIN_MODE` to `release` to run the app in production mode.

File: `packer/files/my-app.service`

Then the script to install our Go app. Typically, you would have a CI pipeline that builds the source and produces a binary that you install on the EC2 instance; installing Go on the Ubuntu image here just simplifies the process. You can use exactly the same script to install this app from my repo.

File: `packer/scripts/bootstrap.sh`

Optionally, you can create a test suite for your AMI.

File: `packer/inspec/inspec.yml`

To write tests, you can use Ruby and InSpec's built-in resources to test different scenarios. For example, we can verify that the systemd unit file exists and that the service is installed and running.

File: `packer/inspec/controls/test-my-app.rb`

We're ready to build the AMI image with Packer.

Create AWS ALB with EC2 backend

In the first example, we will create an application load balancer and manually register EC2 instances. This approach is common when you have a stateful application or maybe even a database where you don't want to use an auto-scaling group.

When you need to create multiple EC2 instances or any other resources, it's better to use a map with `for_each` instead of the `count` meta-argument.

That way, you can target individual instances, for example, if you need to resize a single EC2 instance or increase its disk size.

In this example, we have two instances of the app, each with its own instance type and subnet ID, so they are spread across different availability zones.

File: `terraform/6-example-1.tf`
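Why a map is safer than `count` can be shown with a simplified model of Terraform resource addresses: with `count`, an instance is identified by its list index, so removing the first element shifts every address after it; with `for_each`, the address is the map key and the remaining instances are untouched. This is an illustration, not Terraform's planner; the resource name `aws_instance.my_app` and the instance keys are hypothetical.

```go
package main

import (
	"fmt"
	"sort"
)

// countAddresses models count: each instance is addressed by its list index.
func countAddresses(resource string, names []string) map[string]string {
	out := make(map[string]string, len(names))
	for i, n := range names {
		out[fmt.Sprintf("%s[%d]", resource, i)] = n
	}
	return out
}

// forEachAddresses models for_each over a map: each instance is addressed by its key.
func forEachAddresses(resource string, names []string) map[string]string {
	out := make(map[string]string, len(names))
	for _, n := range names {
		out[fmt.Sprintf("%s[%q]", resource, n)] = n
	}
	return out
}

// changed lists addresses whose instance differs between two configurations,
// i.e. addresses Terraform would have to modify, replace, or destroy.
func changed(before, after map[string]string) []string {
	var diff []string
	for addr, v := range before {
		if after[addr] != v {
			diff = append(diff, addr)
		}
	}
	sort.Strings(diff)
	return diff
}

func main() {
	before := []string{"my-app-00", "my-app-01", "my-app-02"}
	after := []string{"my-app-01", "my-app-02"} // remove the first instance
	const res = "aws_instance.my_app"
	fmt.Println("count:   ", changed(countAddresses(res, before), countAddresses(res, after)))
	fmt.Println("for_each:", changed(forEachAddresses(res, before), forEachAddresses(res, after)))
}
```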

Unlike a network load balancer, which traditionally relied on the security rules of the EC2 instances (newer network load balancers can also have security groups), an application load balancer has its own security group.

Since we need to create two security groups that reference each other, we use the aws_security_group Terraform resource to create each group without any rules and separate aws_security_group_rule resources to open ports. Otherwise, you will get a cyclic dependency error.
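The cyclic dependency can be illustrated with a toy dependency graph and a depth-first cycle check. This is a simplified model of Terraform's graph, not its real implementation, and the rule names are hypothetical: inline rules make each group reference the other, while separate rule resources depend on both groups and leave the groups themselves independent.

```go
package main

import "fmt"

// hasCycle reports whether a dependency graph (resource -> resources it
// references) contains a cycle, using a three-state depth-first search.
func hasCycle(deps map[string][]string) bool {
	const (
		_ = iota // unvisited: the zero value for missing map keys
		inProgress
		done
	)
	state := make(map[string]int)
	var visit func(n string) bool
	visit = func(n string) bool {
		switch state[n] {
		case inProgress:
			return true // n is already on the current path: back edge
		case done:
			return false
		}
		state[n] = inProgress
		for _, d := range deps[n] {
			if visit(d) {
				return true
			}
		}
		state[n] = done
		return false
	}
	for n := range deps {
		if visit(n) {
			return true
		}
	}
	return false
}

func main() {
	// Inline ingress/egress blocks: each group references the other group's ID.
	inline := map[string][]string{
		"aws_security_group.ec2": {"aws_security_group.alb"},
		"aws_security_group.alb": {"aws_security_group.ec2"},
	}
	// Separate rule resources: groups reference nothing; each rule needs both groups.
	separate := map[string][]string{
		"aws_security_group.ec2":                 nil,
		"aws_security_group.alb":                 nil,
		"aws_security_group_rule.ec2_from_alb":   {"aws_security_group.ec2", "aws_security_group.alb"},
		"aws_security_group_rule.alb_to_ec2":     {"aws_security_group.alb", "aws_security_group.ec2"},
	}
	fmt.Println("inline rules cycle:  ", hasCycle(inline))
	fmt.Println("separate rules cycle:", hasCycle(separate))
}
```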

Create one security group for the EC2 instances and the second one for the application load balancer.

File: `terraform/6-example-1.tf`

- Now we need to open the main port 8080 from the application load balancer to the EC2 instances.

- Then port 8081 for the health check.

- By default, AWS creates an allow-all egress rule for a new security group, but Terraform removes it. If your app requires internet access, you can open it with the `full_egree_ec2` resource, which is a good starting point.

Next, firewall rules for the application load balancer.

- First, we want to open port 80 for all incoming requests from the internet. In the next example, we will use HTTPS.

- Then we need egress rules to forward requests to the EC2 instances on port 8080 and to reach the health check on port 8081.

Now we can create EC2 instances.

- To iterate over the map of EC2 instances, you can use the `for_each` meta-argument.

- Then specify the AMI ID that you got from the Packer output; you can also find it in the AWS console under Amazon Machine Images.

- We can use the `machine_type` value from the map for the instance type.

- If you plan to SSH into the instance, you can specify the `key_name`. I would suggest using Session Manager instead.

- Then `subnet_id` is where to create the virtual machine.

- Finally, use the security group that we defined earlier.

File: `terraform/6-example-1.tf`

The application load balancer uses the target group to distribute traffic to your application instances.

- Our app listens on port 8080 using plain HTTP.

- You need to provide the VPC ID, and optionally you can configure `slow_start` if your application needs time to warm up. The default value is 0 (disabled).

- Then you can select the load balancing algorithm; the default is `round_robin`, but you can also choose `least_outstanding_requests`.

- There is also an optional `stickiness` block if you need it; I will disable it for now.

- The health check block is very important. If the health check on an EC2 instance fails, the load balancer removes it from the pool.

First, you need to enable the health check. Then specify port 8081, the HTTP protocol, and the /health endpoint. You can also customize the expected status code (`matcher`); to indicate that the instance is healthy, the app sends a 200 status code.

To manually register the EC2 instances with the target group, iterate over each virtual machine and attach it to the group on traffic port 8080.

File: `terraform/6-example-1.tf`

Now the load balancer itself. There are two schemes available to you in AWS.

- You can make the load balancer private (internal), so it can be accessed only from within the VPC, or public (internet-facing) to accept requests from the internet.

- Then the load balancer type, which is `application`, and a dedicated security group that allows requests on port 80 and outbound traffic to the EC2 instances on ports 8080 and 8081.

- Optionally, you can enable `access_logs`, which are stored in an S3 bucket. In the logs, you can see who initiated each connection to your load balancer. Logging can be costly if you have a lot of traffic.

- For the load balancer, you also need to specify the `subnets` where you want to provision it. Since it's a public load balancer, I will pick the public subnets.

Each load balancer needs a listener to accept incoming requests.

- In the listener, you define the port, which in our case is 80, and the protocol, which is plain HTTP.

- You also need a `default_action` to route traffic somewhere. Here we forward all incoming requests to our target group on port 8080.

File: `terraform/6-example-1.tf`

We are ready to create the first load balancer. Head over to the terminal and run terraform apply.

You can get the hostname of the load balancer and use it to test the app.

First, let's try the /ping endpoint with curl. It should return {"message":"pong"} with a 200 status code.

Also, you can access the /hostname endpoint.

But if you try to hit the /health endpoint, you'll get a 404 page not found error: the main router on port 8080 doesn't serve /health, and port 8081 is open only to the application load balancer.

Create AWS ALB with Auto Scaling Group

In the second example, instead of manually registering EC2 instances, we will attach an application load balancer to an auto-scaling group. Based on the load, the group can scale the number of EC2 instances up or down to handle the traffic.

For this example, we don't need a map with EC2 instances, but we need the same security groups. We could reuse the security groups from the first example, but to make this a self-contained example, let's quickly recreate them.

File: `terraform/7-example-2.tf`

Then the same firewall rules for the EC2 security group.

Now, for the application load balancer, we also need to open port 443 to handle HTTPS traffic.

Instead of creating EC2 instances, we need to define a launch template.

The auto-scaling group will use it to spin up new VMs.

- Replace `image_id` with your Packer AMI ID and `key_name` with your own key pair.

File: `terraform/7-example-2.tf`

The target group and its health check are exactly the same as in the first example.

File: `terraform/7-example-2.tf`

The auto-scaling group will be responsible for creating and registering new EC2 instances with the load balancer.

- You can specify the min and max sizes for the group, but that's not enough to scale it automatically; they only define the boundaries.

- We want to create the load balancer in public subnets, but keep the nodes in private subnets without direct internet access.

- To register this auto-scaling group with the target group, use `target_group_arns`.

- Then provide the `launch_template`; you can also override the default `instance_type`, for example with `t3.micro`.

To scale your auto-scaling group dynamically, you need to define a scaling policy. In this example, we use CPU utilization as the metric.

If the average CPU usage across all virtual machines exceeds 25%, an additional EC2 instance is added. In production, you would set it closer to 80%.
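Assuming the policy is a target tracking policy on average CPU (the tutorial's exact settings aren't shown here), its effect can be approximated with a proportional model: scale the current capacity by observed/target CPU and clamp the result to the group's min and max sizes. This is a rough sketch, not AWS's actual algorithm, which also accounts for things such as instance warm-up.

```go
package main

import (
	"fmt"
	"math"
)

// desiredCapacity is a simplified proportional model of CPU target tracking:
// scale the current capacity by avgCPU/targetCPU, round up, and clamp the
// result to the group's min and max sizes.
func desiredCapacity(current int, avgCPU, targetCPU float64, minSize, maxSize int) int {
	desired := int(math.Ceil(float64(current) * avgCPU / targetCPU))
	if desired < minSize {
		return minSize
	}
	if desired > maxSize {
		return maxSize
	}
	return desired
}

func main() {
	// Hypothetical group: min 1, max 3, currently 2 instances, 25% CPU target.
	for _, cpu := range []float64{10, 25, 40, 90} {
		fmt.Printf("avg CPU %.0f%% -> %d instances\n", cpu, desiredCapacity(2, cpu, 25, 1, 3))
	}
}
```

The model also shows why min and max sizes alone don't scale anything: they only clamp whatever the policy asks for.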

Next is the same load balancer resource; the only difference is that its security group also opens port 443.

File: `terraform/7-example-2.tf`

Now the listener; let's start with the same port 80.

Sometimes you need to accept HTTP requests on port 80 and redirect them to the secure port 443.

We will add that rule later.

File: `terraform/7-example-2.tf`

Let's apply Terraform again and test the application load balancer.

Use the /ping endpoint to check the status of the Golang app.

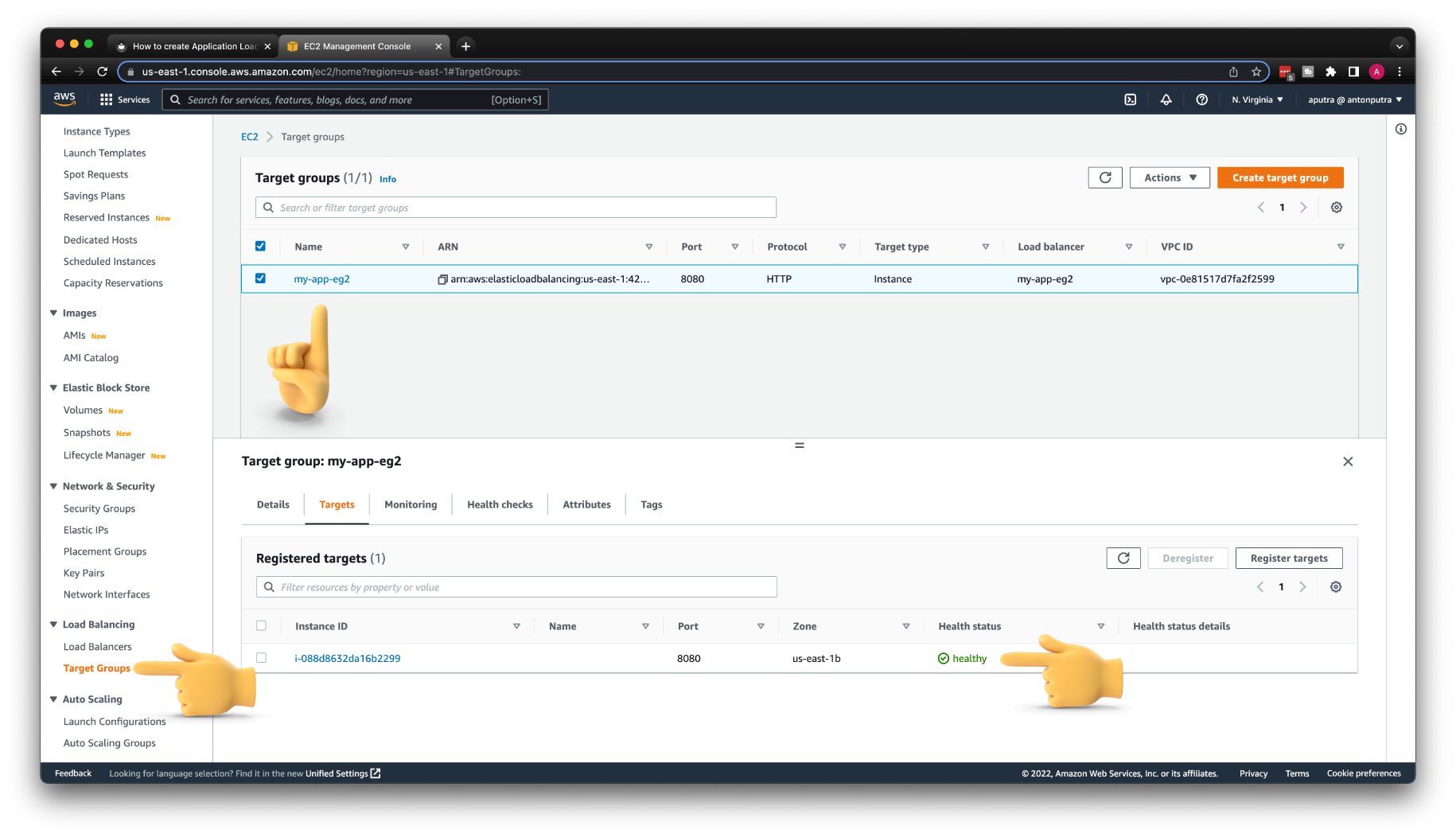

If it fails, check if you have healthy targets in the target group. The same applies to the previous example.

Secure ALB with TLS certificate

The next step is to secure your app with HTTPS. For that, we need to create a custom domain and obtain a certificate.

Since I already have a Route 53 hosted zone, I can use a data source instead of a resource to get a reference to it.

You need to replace the name with your hosted zone.

File: `terraform/7-example-2.tf`

To get a TLS certificate, you can use the aws_acm_certificate resource and provide a fully qualified domain name.

In my case, it's api.antonputra.com.

File: `terraform/7-example-2.tf`

To prove that you own the domain, you need to create a DNS validation record (ACM uses CNAME records for DNS validation).

Since we may request a certificate for multiple domain names, let's iterate over all of them and create the necessary DNS records.

There is a special Terraform resource, aws_acm_certificate_validation, that waits until the TLS certificate is issued. It's optional, but sometimes other resources, such as API Gateway, depend on a valid certificate to proceed.

File: `terraform/7-example-2.tf`

Create a DNS record for your load balancer. You can use a CNAME record, but in AWS it is preferable to use an alias record.

Route 53 resolves an alias record directly to the load balancer's IP addresses, so clients skip the extra lookup that a CNAME requires.

File: `terraform/7-example-2.tf`

We have a custom DNS record and a valid certificate; now, we can create an HTTPS listener on our load balancer.

You need to specify the certificate_arn and can optionally adjust the ssl_policy to match your requirements for clients.

Then the same default_action that forwards requests to the same target group on port 8080.

The application load balancer terminates TLS and opens a new connection to your EC2 instances using plain HTTP.

Frequently, we want to accept requests on port 80 but immediately redirect them to the secure port 443 and the HTTPS protocol.

Finally, let's create an output variable to return the custom load balancer endpoint.

File: `terraform/7-example-2.tf`

Go back to the terminal and run terraform apply.

Test your app with HTTPS and a custom domain.

If you try to use HTTP, the load balancer will redirect and enforce HTTPS.